Cloud storage overview

Cloud storage is a cloud computing model in which users store data on the internet through a cloud provider that manages and operates the storage as a service. Users do not have to maintain the underlying infrastructure; storage is delivered on demand and can be accessed from anywhere in the world. Applications can access cloud storage through traditional storage protocols or directly through an API. Many providers also offer services that help collect, manage, secure, and analyze data at very large scale.

We will look at three of the top cloud providers, Azure (Microsoft), AWS (Amazon), and GCP (Google), compare their offerings, and show how they can serve many different use cases.

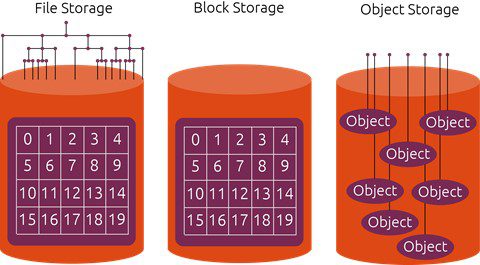

3 types of storage

Before we look into Azure, AWS, and GCP, it is important to understand the different types of storage. There are three types of cloud data storage: object storage, file storage, and block storage. Each offers its own advantages and has its own use cases.

Object

Object storage is designed for unstructured data, where each piece of data is stored as an object rather than as a file or a volume. Objects are retrieved by a globally unique identifier and carry a collection of metadata. They often live in a bucket that sets security and access policies for the objects in it. Users can store any type of data, of any size, in this storage type.
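The object model described above can be sketched as a toy in-memory store. This is only an illustration of the idea (unique identifier, payload, metadata, bucket), not how any provider implements it; the `Bucket` and `StoredObject` names are hypothetical.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    data: bytes                                   # the payload, any type or size
    metadata: dict = field(default_factory=dict)  # descriptive key/value pairs


class Bucket:
    """Toy in-memory bucket: a flat namespace of objects keyed by unique ID."""

    def __init__(self, name):
        self.name = name
        self._objects = {}

    def put(self, data, **metadata):
        # A random UUID stands in for the globally unique identifier.
        object_id = str(uuid.uuid4())
        self._objects[object_id] = StoredObject(data, dict(metadata))
        return object_id

    def get(self, object_id):
        return self._objects[object_id]


bucket = Bucket("demo-bucket")
photo_id = bucket.put(b"\x89PNG...", content_type="image/png", owner="alice")
stored = bucket.get(photo_id)
print(stored.metadata["content_type"])
```

Note that there is no directory tree here: the identifier alone is enough to find the object and its metadata.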

File

File storage works like the file systems on our computers and servers, which store data in organized, tree-like hierarchical directories. Some implementations pair the service with a managed storage server for compatibility with Windows or Linux applications; others use proprietary file systems and protocols. File storage often uses a Network Attached Storage (NAS) server, a storage device connected to a network that allows storage and retrieval of data from a centralized location. This type is good for large content repositories, development environments, media stores, and home directories.

Block

Block storage splits data into evenly sized blocks, each with its own address. Unlike objects, these blocks carry no metadata describing their contents. Block storage behaves much like a raw disk volume and is typically accessed over a storage area network (SAN), which provides access to consolidated, block-level data storage. Because an individual block can be rewritten in place, block storage suits data that changes often, such as databases and virtual machine disks; object storage, by contrast, is better for data that rarely changes, since changing an object means writing a new object.
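As a rough illustration of evenly sized, addressable blocks, here is a minimal sketch in Python. The 4-byte block size and the `split_into_blocks` helper are assumptions chosen to keep the output readable; real volumes use much larger blocks.

```python
BLOCK_SIZE = 4  # bytes per block (assumed value, for illustration only)


def split_into_blocks(data, block_size=BLOCK_SIZE):
    """Split data into evenly sized blocks, zero-padding the last one."""
    blocks = []
    for offset in range(0, len(data), block_size):
        chunk = data[offset:offset + block_size]
        blocks.append(chunk.ljust(block_size, b"\x00"))
    return blocks


volume = split_into_blocks(b"hello world")

# Each block is identified only by its address (its position in the volume);
# nothing in the block itself describes what it contains.
for address, block in enumerate(volume):
    print(address, block)

# Reading a block back requires nothing more than its address.
third_block = volume[2]
```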

Storage offerings

Azure Blob Storage

Azure Blob Storage is Microsoft’s solution for object storage. It is built for storing very large amounts of unstructured data: serving images or files to a browser, storing files for access, streaming video, data backup and restore, and archiving. Microsoft provides SDKs for many languages to connect to its API. It has four performance tiers: premium, hot, cool, and archive. The tiers reflect how frequently the user accesses the data, and pricing varies by tier. Blob Storage is offered on a pay-as-you-go plan, so users pay only for the storage they use.

AWS S3 Storage

Amazon’s object storage solution is AWS Simple Storage Service (S3). Like Azure Blob Storage, it lets users store unstructured data at massive scale, and it offers scalability, data availability, security, and performance. It also has four performance tiers at different price points: standard, auto-tiering, infrequent access, and Glacier for archiving. Its use cases include data lakes, websites, mobile applications, backup and restore, archiving, IoT devices, and big data analytics.

Google Cloud Storage

Google’s object storage solution is aimed at companies of all sizes. Customers can store any amount of data and retrieve it as often as they like, with unlimited storage and no minimum object size. It features low latency and high durability, and it also provides four performance tiers: standard, nearline, coldline, and archive.

Object storage is just one of the storage offerings each company provides. For file storage:

- Azure: Azure Files

- AWS: Elastic File System (EFS)

- GCP: Filestore

For block storage:

- Azure: Managed Disks

- AWS: Elastic Block Store

- GCP: Persistent Disk

Each provider has other storage services besides these, but these are the main services for the three types of storage we have covered. To learn more about the storage options, go to Azure Storage Services for Azure, AWS Storage Services for AWS, and GCP Storage Services for GCP.

Pricing

Pricing across the three providers can get complicated, and no single provider is always the cheapest. All three offer a pay-as-you-go plan, which will usually be far cheaper than running an on-premises datacenter for storage: the user pays only for the storage capacity and services they use. Within each provider, many options can be enabled or disabled, and each affects the price of the service.

The best way to compare prices across Azure, AWS, and GCP is to use each platform’s pricing calculator. In each calculator the user enters the service they want to use, the storage capacity they need per month, the number of operations they plan to perform per month, and so on. The calculator then presents an estimate of the monthly cost of that service. So, when comparing providers, it is important to know what you need and then run the same requirements through each platform’s calculator. Here is an example of what some comparison estimates might look like:

| | AWS | Azure | GCP |
|---|---|---|---|
| Object Storage<br>100 GB capacity, 100,000 operations | S3 Standard<br>$2.84 per month | Blob Standard Hot<br>$2.92 per month | Standard Storage<br>$2.84 per month |
| File Storage<br>100 GB standard storage, 1,000 GB infrequent access, 10 GB infrequent access requests | Elastic File System<br>$19.86 per month<br>1000 infrequent access requests, 20% frequently accessed | Files<br>$19.23 per month<br>100 GB Hot and 1000 GB Cool, 1% in metadata and snapshots | Filestore<br>$20.45 per month |
| Block Storage<br>1 disk instance, 100 GB, 2 snapshots per day | Elastic Block Store<br>$33.95 per month<br>Standard SSD, 10 GB snapshots | Managed Disks<br>$11.00 per month<br>Standard SSD, E10 (128 GB), 10 GB snapshots | Persistent Disk<br>$43.00 per month<br>Standard SSD |

The left-hand side of the table shows the specs that were entered into each of the pricing calculators. Underneath each final price are additional specs that were specific to that calculator and that the other calculators might not have included. As you can see, for object storage Azure is a little more expensive than the others, for file storage Azure is a little cheaper, and for block storage Azure is far cheaper. However, many options and settings can change these prices significantly, so Azure might not be the cheapest if the user needs different capacity or operations. That is why, when pricing matters, a business choosing a cloud provider must know what it needs in terms of storage capacity and operations in order to get accurate estimates for each platform. Doing your own estimates and comparisons is crucial.
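The comparison above can also be done in a few lines of Python once the calculator results are collected. This is a minimal sketch that uses only the monthly estimates from the table; the `cheapest` helper is a hypothetical name, and it reports ties rather than picking one provider arbitrarily.

```python
# Monthly estimates in USD, copied from the comparison table.
estimates = {
    "object": {"AWS": 2.84, "Azure": 2.92, "GCP": 2.84},
    "file": {"AWS": 19.86, "Azure": 19.23, "GCP": 20.45},
    "block": {"AWS": 33.95, "Azure": 11.00, "GCP": 43.00},
}


def cheapest(prices):
    """Return every provider tied for the lowest price, sorted by name."""
    lowest = min(prices.values())
    return sorted(name for name, price in prices.items() if price == lowest)


for storage_type, prices in estimates.items():
    print(storage_type, cheapest(prices), min(prices.values()))
```

Swapping in your own calculator results for the `estimates` dictionary gives the same comparison for your workload.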

Businesses who benefitted

Thousands of businesses have benefitted from migrating to or using one of the three cloud providers we’ve talked about. The reasons vary: on-prem storage that could not keep up with a growing number of customers, significantly lower costs after moving to the cloud, or less employee time spent managing data. Here is one example from each cloud platform, with links to those stories:

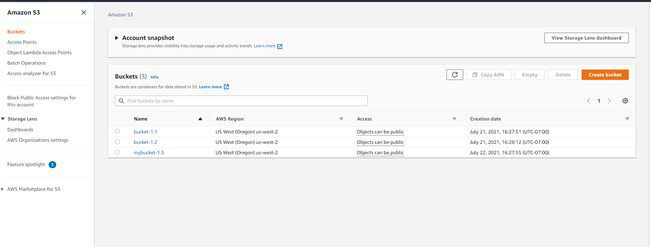

Connecting to storage API

In development, being able to access and manipulate the data in storage is crucial. One way to do that is to connect to the storage API. I will show how this is done in AWS for S3 storage. First, you need an Amazon Web Services account with access to the S3 storage service. Inside of Amazon S3 it will look like this:

You can create a bucket (container) to hold your storage objects. As you can see, there are already three created in the picture above. Next, we need to connect to the storage API from our development environment. In this case we’re using Python in Visual Studio Code, and the SDK we need to import is boto3.

In your favorite CLI, from the folder where you created the project, run the command “pip install boto3”. Now we can upload and download data to and from the S3 storage service in Python.

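```python
import boto3

# Create the low-level functional client.
# Replace the placeholder strings with the access key pair created in IAM.
s3_client = boto3.client(
    's3',
    aws_access_key_id='your access key',
    aws_secret_access_key='your secret access key',
)

# Upload the local file 'aws-logo.jpg' to the bucket 'bucket-1.1',
# storing it under the name 'aws-image.jpg'.
s3_client.upload_file('aws-logo.jpg', 'bucket-1.1', 'aws-image.jpg')
```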

First we import the boto3 SDK, then we create the S3 client. To get the credentials the client needs, go to the IAM management service and open “Users”. Then go to the “Security credentials” tab and, under “Access keys”, click “Create access key”. This creates the access key and secret access key to pass to boto3.client() to connect to the API. You also have the option to download the access key and secret as a CSV file for later reference.

Next, to upload a file from your current folder, use the built-in boto3 function s3_client.upload_file(). Pass in the name of the file as it appears in your folder, the name of the bucket you wish to upload it to, and the name you want the file to have in the S3 bucket. Then run the Python script, and you will see the file in the bucket in the S3 dashboard.
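The upload step can be wrapped in a small helper that checks the local file first. This is a minimal sketch, not part of boto3: `upload_local_file` is a hypothetical name, and it accepts any client object that has boto3's `upload_file(Filename, Bucket, Key)` method, so it can be exercised without an AWS account.

```python
import os


def upload_local_file(s3_client, local_path, bucket, key=None):
    """Upload local_path to bucket and return the object name that was used.

    s3_client is any object with boto3's upload_file(Filename, Bucket, Key)
    method, so a stand-in can be passed when testing.
    """
    if not os.path.isfile(local_path):
        raise FileNotFoundError(f"No such local file: {local_path}")
    if key is None:
        key = os.path.basename(local_path)  # default: keep the local file name
    s3_client.upload_file(local_path, bucket, key)
    return key
```

With a real client, the call would look like `upload_local_file(boto3.client('s3'), 'aws-logo.jpg', 'bucket-1.1', 'aws-image.jpg')`. When `boto3.client('s3')` is created without explicit keys, boto3 looks for credentials in its default locations, such as the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables, which keeps secrets out of the script.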

For downloading a file from a bucket in S3 storage it is a similar process. Simply create the S3 client as we did before, then write the download file function:

```python
s3_client.download_file('bucket name', 'source file name', 'destination file')
```

As before, pass in the bucket name, the source file name, and the destination file name you want the file to have on your machine. After running the script, you will see the downloaded file in your current directory, alongside the script.
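If you do not know the file names in a bucket ahead of time, one option is to list them first and then download each one. The sketch below assumes boto3's `list_objects_v2` and `download_file` client methods; `download_listed_objects` is a hypothetical helper name, and it only handles the first page of results (a single listing call returns at most 1,000 objects).

```python
import os


def download_listed_objects(s3_client, bucket, dest_dir="."):
    """Download every object returned by one list_objects_v2 call.

    Returns the list of local paths written. Objects are saved under their
    base name, so two keys with the same base name would overwrite each other.
    """
    response = s3_client.list_objects_v2(Bucket=bucket)
    downloaded = []
    # "Contents" is missing from the response when the bucket is empty.
    for entry in response.get("Contents", []):
        key = entry["Key"]
        if key.endswith("/"):
            continue  # skip folder placeholder objects
        destination = os.path.join(dest_dir, os.path.basename(key))
        s3_client.download_file(bucket, key, destination)
        downloaded.append(destination)
    return downloaded
```

Passing the client in as a parameter keeps the helper independent of how the client was created.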

That is how we connect to the AWS S3 storage service API using Python. Azure offers a very similar way to connect to its Blob Storage API, using the “azure.storage.blob” package. For full documentation of the Python S3 API, go to Boto3 Documentation; for connecting to the Blob Storage API, go to Azure SDK Documentation.
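For comparison, here is a rough sketch of the same upload and download on Azure. It assumes the `azure-storage-blob` package is installed (`pip install azure-storage-blob`) and that you have a storage account connection string; the two function names are hypothetical, and the container and blob names are whatever you pass in.

```python
def upload_blob_from_file(connection_string, container, blob_name, local_path):
    """Upload a local file to a blob, replacing the blob if it already exists."""
    # Imported inside the function so this sketch loads without the package.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    blob_client = service.get_blob_client(container=container, blob=blob_name)
    with open(local_path, "rb") as data:
        blob_client.upload_blob(data, overwrite=True)


def download_blob_to_file(connection_string, container, blob_name, local_path):
    """Download a blob and write its contents to a local file."""
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    blob_client = service.get_blob_client(container=container, blob=blob_name)
    with open(local_path, "wb") as out:
        out.write(blob_client.download_blob().readall())
```

The shape mirrors the S3 example: create a client from credentials, then name a container (the counterpart of a bucket), a blob, and a local file.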

Want more information?

Are you curious about how we can help you start your journey with Azure, AWS, and GCP? Or are you interested in learning about how iSoftStone can help you integrate cloud storage solutions into your workplace? Please contact us today!